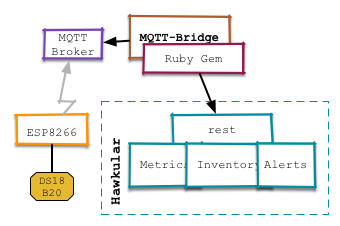

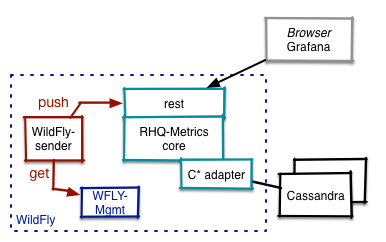

In the first post I talked about how to send IoT sensor data to the metrics subsystem of Hawkular and how to register the metric in Hawkular so that it can be graphed in the console.

In this article I will talk about how the Hawkular alerts component (which is already available in Hawkular-full) can be used to react to incoming data and make an LED on an Arduino blink.

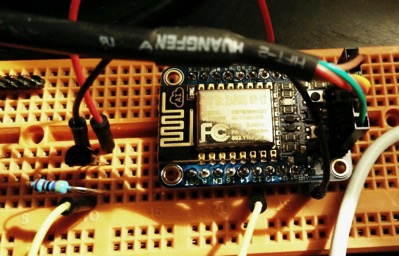

Arduino Uno with Ethernet shield and yellow LED

For the Arduino I have added a cheap ENC28J60-based Ethernet shield. There is a standard library available that makes it easy to set up a web server, which is what we are using (in fact the code is mostly from that example).

The new (full) setup is like this:

Setup with Arduino as actor

Alerting

Hawkular already has an alerting component built in that compares incoming values with thresholds and then invokes plugins to forward the fired alert via email, to IRC channels and through many other means of communication. The plugin we are going to use here is the webhook one. As only the email plugin is present in the standard Hawkular distribution, we need to install the webhook plugin first:

Check out Hawkular-alerts

git clone https://github.com/hawkular/hawkular-alerts.git

cd hawkular-alerts

Build Hawkular Alerts:

mvn -Pdev -DskipTests install

Now you can copy the plugin to the Hawkular server:

cd hawkular-alerts-actions-plugins

cd hawkular-alerts-actions-webhook

cp hawkular-alerts-actions-webhook.war \
  $HAWKULAR/modules/system/layers/hawkular/org/hawkular/nest/main/deployments/

As Hawkular does not pick this up automatically, you need to restart the Hawkular server after copying hawkular-alerts-actions-webhook.war over.

Set up alerting

I have modified the ruby script to pull in a configuration file (in YAML format):

---
16617927:40.176.91.120.5.0.0.125:
  :name: Living room
  :alert:
    :comparator: :gt
    :value: 25

The first line is the id of the metric to which the following lines apply. The second line is the display name for the UI. The next section then sets up alerting.
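As a minimal sketch of how such a file can be read with Ruby's standard `yaml` library (the helper name `load_sensor_config` and the inlined `CONFIG_TEXT` are assumptions for illustration, not the code from the actual script): the keys starting with a colon come back as Ruby symbols, so the safe loader has to be told that `Symbol` is allowed.

```ruby
require 'yaml'

# Same structure as the configuration file shown above, inlined for the example.
CONFIG_TEXT = <<~YAML
  ---
  16617927:40.176.91.120.5.0.0.125:
    :name: Living room
    :alert:
      :comparator: :gt
      :value: 25
YAML

# Hypothetical helper: parses the configuration text into a Hash.
# Keys such as ":name" are Ruby symbols, so Symbol must be permitted
# explicitly when using the safe loader.
def load_sensor_config(text)
  YAML.safe_load(text, permitted_classes: [Symbol])
end

config = load_sensor_config(CONFIG_TEXT)
config.each do |metric_id, settings|
  alert = settings[:alert]
  puts "#{metric_id} (#{settings[:name]}): alert when #{alert[:comparator]} #{alert[:value]}"
end
```

The metric id stays a plain string, while `:comparator` and `:value` are then available as `alert[:comparator]` and `alert[:value]`.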

Let's have a look at how this works in code (you can see the full code on GitHub).

Register the webhook to be used below:

@webhook_props = { url: 'http://172.31.7.177/', # target server
                   method: 'POST' }             # http verb

@alerts_client.create_action 'webhook', "send-via-webhook-#{metric_id}", @webhook_props

Set up a trigger and a condition, starting with the trigger:

t = Hawkular::Alerts::Trigger.new({})

t.enabled = true

t.id = "trigger_#{metric_id}"

t.tags = { :resourceId => "#{feed}/#{res_id}" }

The tags tell the UI which resource the trigger applies to, which enables showing the alert in the Hawkular UI.

Next the condition:

c = Hawkular::Alerts::Trigger::Condition.new({})

c.trigger_mode = :FIRING

c.type = :THRESHOLD

c.data_id = metric_id

c.operator = alert[:comparator].to_s.upcase

c.threshold = alert[:value]
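To make clear what this condition means, here is a small stand-alone sketch of the comparison in plain Ruby. It is an illustration only and not how the alerts engine is implemented; the `OPERATORS` table and the `threshold_breached?` helper are assumptions made for this example.

```ruby
# Assumed mapping from upper-cased comparator names to Ruby comparisons.
OPERATORS = {
  'GT'  => :>,
  'GTE' => :>=,
  'LT'  => :<,
  'LTE' => :<=
}.freeze

# Returns true when the value breaches the threshold for the given operator.
def threshold_breached?(value, operator, threshold)
  comparison = OPERATORS.fetch(operator) do
    raise ArgumentError, "unknown operator #{operator}"
  end
  value.public_send(comparison, threshold)
end

alert = { comparator: :gt, value: 25 }          # as read from the YAML file
operator = alert[:comparator].to_s.upcase       # the symbol :gt becomes "GT"

puts threshold_breached?(26.5, operator, alert[:value])  # prints true
puts threshold_breached?(24.0, operator, alert[:value])  # prints false
```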

Finally, as we want the alert not only to show up in the UI but also to be forwarded to our Arduino via the webhook, we need to attach the webhook to the trigger. The plugin id is webhook and the action_id is the one we set up above.

# Reference an action definition

a = Hawkular::Alerts::Trigger::Action.new({})

a.action_plugin = 'webhook'

a.action_id = "send-via-webhook-#{metric_id}"

t.actions.push a

And then we can create the trigger, which will be active immediately.

@alerts_client.create_trigger t, [c], nil

Fire away

Now when a value above the threshold comes in, the webhook is triggered: it opens an HTTP connection to the Arduino and the LED blinks.

In addition, the alert is shown in the Alert Center in the Hawkular UI:

List of high temperature alerts in the alert center.
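If you want to try the webhook without the hardware at hand, a tiny stand-in for the Arduino can help. The following is only a sketch under assumptions: `handle_one_request` is a made-up helper, the listener uses a random local port, and the script sends the POST to itself so that it terminates on its own. To receive real webhook calls you would bind to the address configured in the webhook properties instead.

```ruby
require 'socket'
require 'net/http'

# Hypothetical stand-in for the Arduino: accepts one HTTP request, answers
# with 200 OK and returns the request method ("POST", "GET", ...).
def handle_one_request(server)
  client = server.accept
  request_line = client.gets.to_s
  content_length = 0
  # Read the headers up to the empty line that terminates them.
  while (line = client.gets) && line != "\r\n"
    content_length = line.split(':', 2).last.to_i if line =~ /\Acontent-length:/i
  end
  client.read(content_length) if content_length > 0 # read and discard the body
  client.write("HTTP/1.1 200 OK\r\nContent-Length: 2\r\nConnection: close\r\n\r\nOK")
  request_line.split.first
ensure
  client&.close
end

server = TCPServer.new('127.0.0.1', 0) # port 0: let the OS pick a free port
port = server.addr[1]
receiver = Thread.new { handle_one_request(server) }

# Simulate the webhook call; the JSON body is just a placeholder.
response = Net::HTTP.post(URI("http://127.0.0.1:#{port}/"), '{}',
                          'Content-Type' => 'application/json')
puts "receiver saw #{receiver.value}, replied #{response.code}: the LED would blink now"
server.close
```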

More

Note that the above trigger definition is pretty simple and only has one comparator. Also, the trigger fires each time a value above the threshold comes in. In a more real-world scenario one would add some dampening, so that the trigger only fires when the measurement has been over the threshold several times within some interval.
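To illustrate the idea of dampening, here is a small plain-Ruby sketch that only fires after a number of consecutive values above the threshold. It shows the concept, not the Hawkular Alerts implementation or its API; the class name `ConsecutiveDampener` and its parameters are made up for this example.

```ruby
# Fires only after `required` consecutive values above the threshold.
# Any value at or below the threshold resets the count.
class ConsecutiveDampener
  def initialize(threshold:, required:)
    @threshold = threshold
    @required = required
    @streak = 0
  end

  # Feeds one measurement; returns true when the alert should fire.
  def fire?(value)
    @streak = value > @threshold ? @streak + 1 : 0
    return false if @streak < @required

    @streak = 0 # start counting again after firing
    true
  end
end

dampener = ConsecutiveDampener.new(threshold: 25, required: 3)
readings = [26, 27, 24, 26, 27, 28, 29]
fired = readings.map { |reading| dampener.fire?(reading) }
puts fired.inspect # => [false, false, false, false, false, true, false]
```

A single spike like the first two readings no longer triggers the LED; only the run of 26, 27, 28 does.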

Similarly, if the alert starts your air conditioning, you would set the trigger to auto-disable after firing and add a second condition that switches it back on once the temperature has fallen below a certain level.
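The disable and re-enable behaviour can be pictured as a small latch. Again this is only a conceptual sketch in plain Ruby, not the Hawkular Alerts API; `LatchingTrigger` and the re-arm level of 22 degrees are assumptions chosen for the example.

```ruby
# Fires once when the value rises above `fire_above`, then stays disabled
# until the value has fallen below `rearm_below`.
class LatchingTrigger
  def initialize(fire_above:, rearm_below:)
    @fire_above = fire_above
    @rearm_below = rearm_below
    @armed = true
  end

  # Returns :fire, :rearm or nil for each measurement.
  def evaluate(value)
    if @armed && value > @fire_above
      @armed = false
      :fire
    elsif !@armed && value < @rearm_below
      @armed = true
      :rearm
    end
  end
end

trigger = LatchingTrigger.new(fire_above: 25, rearm_below: 22)
events = [24, 26, 27, 23, 21, 26].map { |temp| trigger.evaluate(temp) }
puts events.inspect # => [nil, :fire, nil, nil, :rearm, :fire]
```

The gap between the two levels keeps the air conditioning from being switched on again while the room is still cooling down.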

There is a lot more you can do with Hawkular Alerts beyond the above, including standalone deployment for embedding it in your own application.

Some further reading:

Hawkular Alerts for Developers -- pretty comprehensive documentation of Hawkular Alerts.

Introduction to AutoResolve triggers

Using Hawkular Alerts as a standalone engine

I had the pleasure to be invited to present the Hawkular eco-system at
